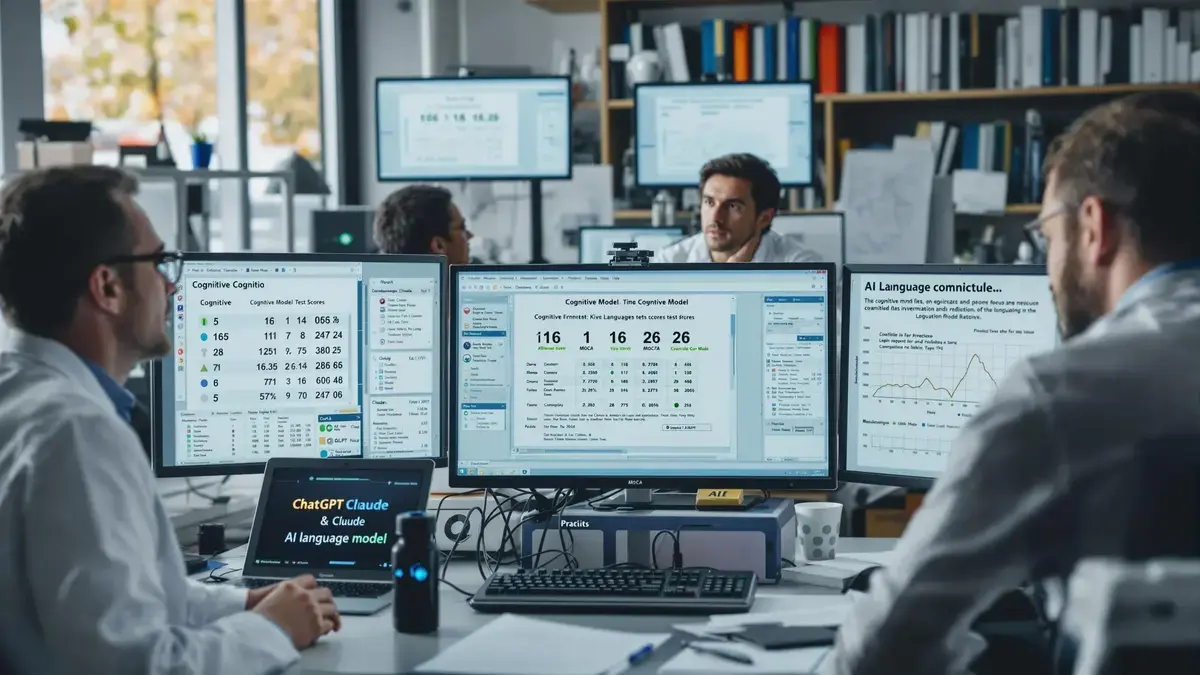

Scientists recently gave a cognitive screening test, normally used to detect cognitive decline in humans, to advanced language models such as ChatGPT 4, ChatGPT 4o, Claude, and Gemini. The results revealed unexpected deficits comparable to those observed in humans with cognitive impairment, with MoCA (Montreal Cognitive Assessment) scores ranging from 16 to 26 out of 30. These findings raise questions about the use of AI in clinical medicine and underline the need for skepticism toward the recommendations these models provide.

Key Information

- Language models assessed with a test designed to detect cognitive decline.

- Analysis of several language models with varied results.

- MoCA scores reveal cognitive deficits similar to those in humans.

- Expectations for AI in clinical medicine called into question.

AI Tested Against Cognitive Decline

Researchers recently subjected several advanced language models to a cognitive screening test designed to detect cognitive decline in humans, an unusual approach that has drawn both curiosity and astonishment in the cognitive sciences. The models examined include ChatGPT 4, ChatGPT 4o, Claude, and Gemini, all among the most advanced artificial intelligence products of our time.

Surprising Results and Comparisons

The results of these tests are striking and raise important questions about the cognitive capabilities of LLMs (large language models). The scores show deficits comparable to those observed in humans, particularly in the context of neurodegenerative diseases. On the MoCA (Montreal Cognitive Assessment) test, ChatGPT 4o scored 26 out of 30, which sits at the threshold conventionally considered normal, while ChatGPT 4 and Claude both scored 25 out of 30, just below it and consistent with mild impairment in a human. Gemini's score is the most concerning at just 16 out of 30, which in a human would point to a markedly more severe impairment.
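To make the comparison concrete, here is a minimal Python sketch that ranks the reported scores and flags those below a cutoff. It assumes the commonly used MoCA cutoff of 26 out of 30 for a normal result; the names `MOCA_SCORES`, `NORMAL_CUTOFF`, and `summarize` are illustrative and do not come from the study.

```python
# Scores as reported in the article, each out of 30.
MOCA_SCORES = {
    "ChatGPT 4o": 26,
    "ChatGPT 4": 25,
    "Claude": 25,
    "Gemini": 16,
}

# Assumption: 26/30 is the conventional cutoff for a normal MoCA result.
NORMAL_CUTOFF = 26


def summarize(scores, cutoff=NORMAL_CUTOFF):
    """Return (model, score, below_cutoff) tuples, highest score first."""
    ranked = sorted(scores.items(), key=lambda item: -item[1])
    return [(model, score, score < cutoff) for model, score in ranked]


if __name__ == "__main__":
    for model, score, flagged in summarize(MOCA_SCORES):
        status = "below cutoff" if flagged else "at or above cutoff"
        print(f"{model}: {score}/30 ({status})")
```

This only compares totals against a single threshold. It says nothing about which test sections cost each model points, and it is not a diagnostic tool.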

Weaknesses of LLMs

A closer analysis of the results highlights specific weaknesses in executive function and visuospatial skills. These results are notable because they invite direct comparison with human patients showing early signs of dementia. This parallel between model performance and human cognitive symptoms raises ethical and practical questions about the use of LLMs in critical contexts.

Improvements and Limitations of LLMs

The findings also show notable improvements in more recent versions of LLMs, which suggests significant room for further progress. However, LLMs cannot be diagnosed as if they were human brains, so the results call for restraint in interpretation. While there is hope for a future in which AI could transform the medical sector, these findings challenge lofty expectations about its current capabilities.

Skepticism Towards LLM Advice

In this regard, advice provided by these LLMs should be treated with skepticism, especially when individual health is at stake. Although they can process and analyze data at an impressive level, their interpretation of a person's cognitive test results should not be mistaken for a medical diagnosis. Caution is warranted, as the consequences of misusing them could be severe.

Future of Cognitive Assessment and Improvement Potential

Ultimately, this research opens a discussion about how cognitive assessment of LLMs could be improved. As scientists continue their work, these surprising results may help us better understand not only artificial intelligence but also our own cognition. The reflection and debate this study has generated will matter for navigating a future in which AI and clinical medicine could meaningfully intertwine.